In today’s rapidly evolving technological landscape, the discourse surrounding artificial intelligence (AI) and natural language processing (NLP) often gravitates towards the grandeur of large language models (LLMs). However, an intriguing shift is taking place, one that champions the smaller yet surprisingly robust small language models (SLMs). As AI developers and businesses grapple with the implications of ever-expanding model sizes, it is crucial to acknowledge the myriad advantages presented by SLMs, not only in performance but also in their alignment with sustainability—a position I firmly believe is necessary for the future of technology.

SLMs, with their significantly fewer parameters, offer a level of efficiency that has the potential to democratize AI. Unlike their hulking counterparts, SLMs can run on everyday devices, from smartphones to personal computers, narrowing the gap between advanced technological tools and general accessibility. This democratization isn’t just desirable; it’s imperative. As society marches toward greater reliance on AI, the methods of implementation must evolve toward inclusivity.

The Financial Weight of Large Models

The development costs associated with LLMs are staggering. Google’s Gemini 1.0 Ultra is said to have cost approximately $191 million to train. Figures of that size raise serious questions about the sustainability of our current trajectory in AI development. The debate over the cost-effectiveness of LLM training must also extend beyond dollar amounts to energy consumption. Researchers from the Electric Power Research Institute have estimated that a single query to an LLM consumes roughly as much energy as ten Google searches. Here SLMs shine: by drastically reducing both energy requirements and financial hurdles, they emerge as a more attractive, viable solution.

The reality is that while LLMs generate interest with their awe-inspiring capabilities, the average company—and more importantly, average users—may find themselves priced out of the market. If we wish to foster innovation and facilitate widespread adoption of AI technologies, prioritizing accessible models must be at the forefront of the conversation.

Task-Specific Brilliance: The Case for Tailored Solutions

The true potential of SLMs lies not in their breadth of application but in their depth of focus. While LLMs can tackle a broad array of general tasks, SLMs excel in specific domains. In medical applications, for instance, SLMs are well suited to managing patient interactions or summarizing lengthy reports. Such specialization brings real efficiency gains. As Zico Kolter, a computer scientist at Carnegie Mellon University, notes, an 8-billion-parameter model can yield impressive results on many tasks.

In a world increasingly marked by a deluge of information, the benefits of task-oriented models become palpably clear. Users are searching for precision, not ambiguity; thus, tailoring our AI innovations to their specific needs is not merely advantageous—it’s crucial.

Innovative Techniques: Knowledge Distillation and Pruning

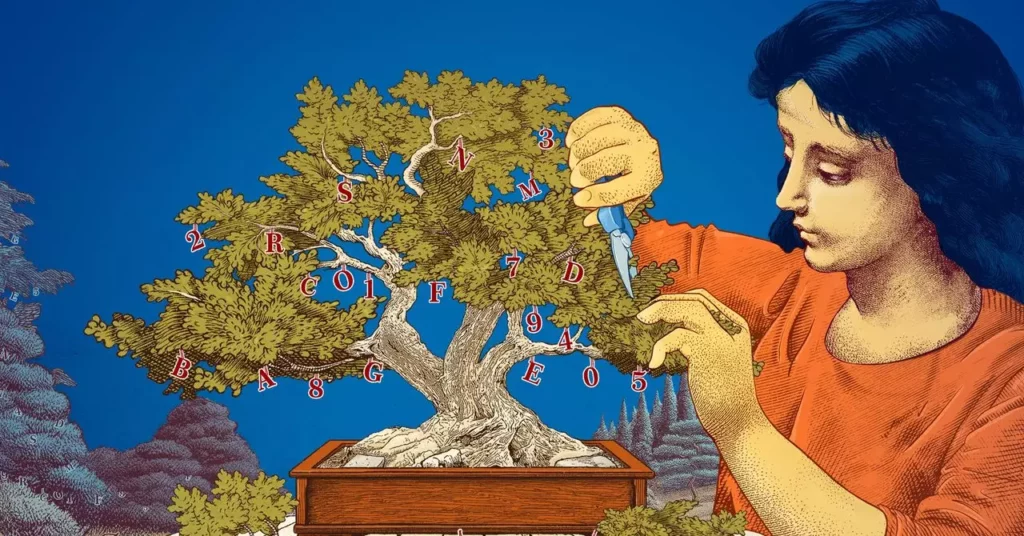

AI research is advancing rapidly, yielding techniques such as knowledge distillation and pruning that improve the performance of smaller models. Knowledge distillation lets SLMs benefit from the robust training of LLMs by learning from curated, well-structured, high-quality data rather than raw, chaotic internet text. As a result, small models can compete with their larger relatives and, on specialized tasks, sometimes outperform them.
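To make the idea of distillation concrete, here is a minimal sketch of one common formulation, in which a small "student" model is trained to match the softened output distribution of a large "teacher". This soft-target variant is an assumption chosen for illustration and may differ from the data-curation approach described above. The function names, the example logits, and the temperature value are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Illustrative only: real training would average this over many examples
    and usually mix it with an ordinary loss on ground-truth labels.
    """
    p = softmax(teacher_logits, temperature)  # teacher (target) distribution
    q = softmax(student_logits, temperature)  # student (predicted) distribution
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # Scaling by temperature squared is a common convention that keeps
    # gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


# Hypothetical logits for a three-class task.
teacher = [2.0, 1.0, 0.1]
good_student = [1.9, 1.1, 0.2]
poor_student = [0.1, 1.0, 2.0]
print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

A student whose outputs track the teacher's receives a loss near zero, while one that ranks the classes differently is penalized more heavily. That difference is the signal the small model learns from.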

Pruning offers another strategy to bolster efficiency: removing excess parameters without sacrificing quality. The paradigm set forth by pioneers like Yann LeCun, who predicted that up to 90% of a network’s parameters could be eliminated without harm, offers an optimistic vision for the future of AI development. It encourages us to rethink our assumptions about model size and performance.
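As a rough illustration of pruning, the sketch below zeroes out the smallest-magnitude weights in a flat list. Magnitude pruning is a simpler stand-in used here for clarity; it is not the method LeCun proposed, and practical pruning usually operates on whole tensors and is followed by fine-tuning. The function name and the example weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove, between 0.0 and 1.0.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_prune = int(len(weights) * sparsity)
    # Order the indices from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights: removing half leaves only the two largest in magnitude.
layer = [0.5, -0.01, 0.2, 0.03]
print(magnitude_prune(layer, 0.5))
```

Whether a given network can tolerate 90% sparsity is an empirical question. The sketch shows only the mechanics of removal, not the accuracy that remains afterward.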

The Agility of Small Language Models

What truly sets SLMs apart is their nimbleness and the low-risk platform they offer for experimentation. As Leshem Choshen of the MIT-IBM Watson AI Lab puts it, smaller models give researchers an invaluable space to explore without the high stakes that come with massive models. This flexibility is essential because it allows creative exploration in a field that moves at lightning speed.

In a climate where technological revolution is the norm, those who can adapt and grow will succeed. SLMs, with their accessible size and efficient capabilities, provide a platform that aligns perfectly with the rapidly changing demands of AI development—an adaptation that many in the tech world would be wise to consider.

The future of AI is not merely about crafting the largest and most powerful models. Instead, it pivots toward ingenuity, efficiency, and sustainability—principles firmly embedded in the ethos of SLMs. As our reliance on AI continues to expand, we cannot ignore the imperative to be responsible stewards of technology, ensuring that our innovations lead not just to greater capabilities, but also to a more sustainable and inclusive future for all.
