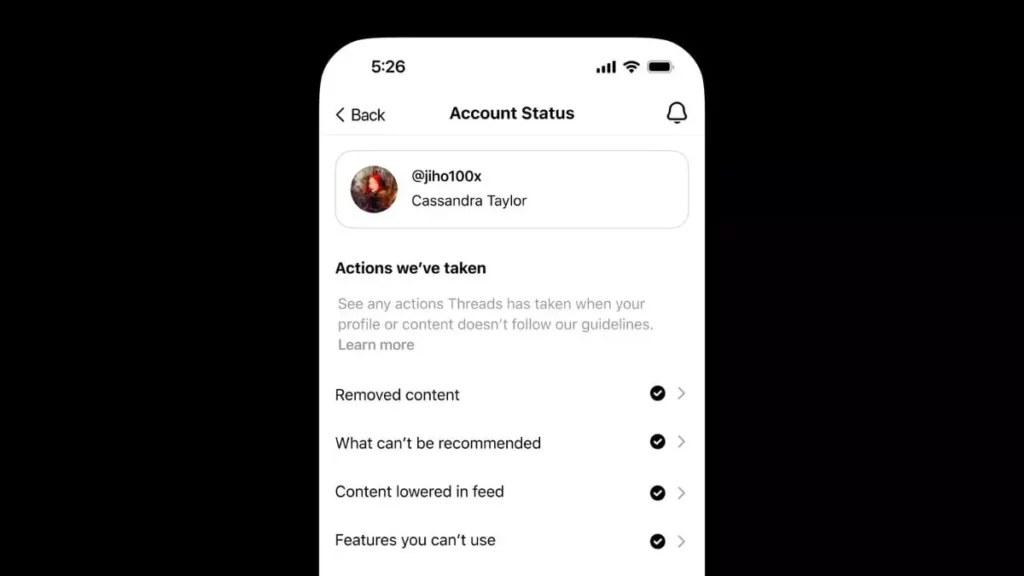

Threads has recently unwrapped its much-buzzed-about Account Status feature, a tool that aims to redefine the way users interact with content on the platform. The shift from a passive observational approach to a more engaging participatory model is enticing. It promises to illuminate the dark corners of content moderation by giving users insight into their own posts and profiles. But is this truly a revolutionary move, or merely an elaborate distraction that does little to resolve the deeper issues surrounding content management?

The concept of transparency is often lauded in the tech world; however, in this case, it comes with hefty caveats. By promising users visibility into why their content might have been removed or had its reach reduced, Threads opens up a can of worms. Are users really prepared for an intricate breakdown of moderation actions? Learning that an algorithm, guided by subjective guidelines, has determined the fate of one’s content can be unsettling. A feature designed to enhance user confidence could inadvertently fuel paranoia about censorship.

The Double-Edged Sword of Moderation

One cannot overlook the inherent contradictions that accompany the implementation of the Account Status feature. By emphasizing the balance between free speech and community safety, Threads risks creating an environment steeped in ambiguity. Who ultimately decides what is “harmful” content versus what’s vital for public debate? Such questions sharpen the tension between upholding free expression and ensuring respectful dialogue, an ever-evolving landscape that may not yield to neat and tidy categorization.

The new reporting mechanism is pitched as a heroic recourse for users who believe moderation decisions have been misguided. However, there’s an undeniable skepticism surrounding its efficiency. Will the grievance process lead to meaningful dialogues with the platform, or will it become a burdensome task, mired in red tape? In dire need of clarity, users may find themselves lost in a labyrinth of bureaucratic delay—further complicating the path to genuine discourse.

The AI Factor: A Necessary Evil?

In an era when artificial intelligence is increasingly interwoven with content creation, Threads finds itself navigating murky waters. The platform’s decision to address AI-generated content in its community guidelines shows commendable foresight, yet it is fraught with peril. As AI continues to gain traction, questions about authenticity loom large. Does AI-generated content deserve the same credibility as human expression, or does it dilute the authenticity of individual voices?

The discourse around AI content moderation is laden with complexities. It invites scrutiny about who bears responsibility if an AI-generated post crosses ethical lines. Threads might be trailblazing in this regard, but whether such initiatives can establish a clearly defined framework of accountability remains an open question.

A Brave New World or a Step Backwards?

One must also consider the potential downsides of this transparent approach. While an organization may project values centered on dignity and safety, its moderation policies could inadvertently lead to self-censorship among users. The fear of being flagged or having one’s content relegated to a shadowy corner of the platform may stifle authentic expression. With a community standard that aims to be all-inclusive, the risk is that users may find themselves polishing their words, fearing the repercussions of expressing opinions that sit close to the line of acceptability.

The meticulous balancing act of promoting free expression while curbing harmful content is a herculean task. Ultimately, Threads is treading a fine and often unstable line as it seeks to foster an environment that is both respectful and open. As it bravely forges ahead, the success of the Account Status feature remains to be seen; the stakes are high, and the potential for missteps is even higher.

As Threads embarks on this new journey, it could very well become a benchmark for social media platforms or a cautionary tale of how even the best intentions can fail under the weight of complexity.
