In recent years, natural language processing (NLP) has come to drive a considerable share of progress in artificial intelligence (AI). As industries adopt large language models (LLMs), two prominent strategies have emerged for adapting these models to specific applications: fine-tuning and in-context learning (ICL). Each serves a purpose, yet each carries distinct implications for how LLMs are put to use. Recent findings from Google DeepMind and Stanford University have put a spotlight on ICL as the more adaptable alternative, but the comparison between the two is far from straightforward.

Traditionally, fine-tuning is a secondary training phase in which a pre-trained model learns from a targeted dataset. This iterative process adjusts the model’s internal parameters, refining its grasp of the target material. The method has an inherent limitation: what the model learns is fixed at training time, so it can struggle with tasks that fall outside the patterns in its training data. ICL works differently. Rather than modifying internal parameters, it places examples in the input to steer responses to novel queries. The adaptation happens at inference time, so the model can take on new tasks without any permanent change to its weights.
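To make the contrast concrete, here is a minimal sketch of what "examples embedded in the input" can look like. The function name `build_icl_prompt`, the `Statement:`/`Reversal:` layout, and the example sentences are all assumptions chosen for illustration, not the format used in the research. The point is only that the model's weights never change: the examples travel with each request.

```python
def build_icl_prompt(examples, query):
    """Assemble a few-shot prompt from (statement, reversal) pairs.

    The prompt layout here is a hypothetical choice for illustration.
    """
    # Each demonstration shows the model the pattern it should continue.
    blocks = [f"Statement: {s}\nReversal: {r}" for s, r in examples]
    # The final block leaves the answer open for the model to complete.
    blocks.append(f"Statement: {query}\nReversal:")
    return "\n\n".join(blocks)


# Made-up demonstrations using nonsense terms.
demo_examples = [
    ("zorb are taller than quib", "quib are shorter than zorb"),
    ("dax are faster than wug", "wug are slower than dax"),
]

prompt = build_icl_prompt(demo_examples, "femp are more dangerous than glon")
print(prompt)
```

A fine-tuned model would instead receive only the final statement, having absorbed the pattern during training.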

Unleashing ICL’s True Potential

The crux of the debate is which method generalizes better. To test this, the researchers constructed synthetic datasets designed to eliminate familiar word associations altogether. By using nonsense terms in complex narratives, they ensured that models had to reason over the material in front of them rather than fall back on knowledge acquired during pretraining. This control matters because it separates genuine generalization from simple recall.

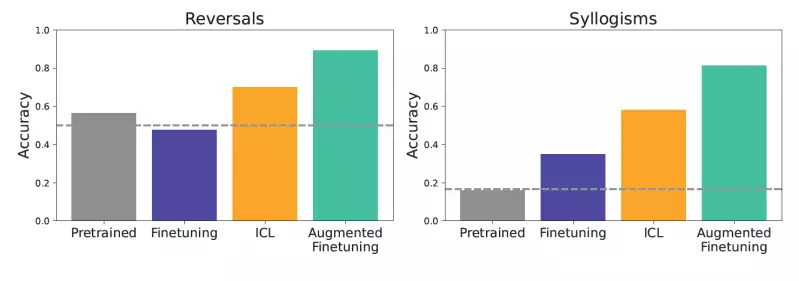

Among the tests, one particularly striking exercise evaluated whether models could handle reversals. For instance, given “femp are more dangerous than glon,” could the model infer that glon are less dangerous than femp? The results pointed to ICL’s stronger ability to handle such multi-layered logical relationships, where it outperformed conventional fine-tuning. The findings also suggest that the choice between the two is no longer an either-or scenario; rather, it is a more complex discussion about how they can coexist and even complement each other.
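A reversal test of this kind can be sketched in a few lines. The relation table, the word generator, and the `train`/`test` field names below are assumptions made for illustration; the researchers' actual datasets and vocabulary are not reproduced here.

```python
import random

# Hypothetical relation pairs: each maps a comparison to its reversed form.
REVERSED_RELATION = {
    "more dangerous than": "less dangerous than",
    "taller than": "shorter than",
    "faster than": "slower than",
}


def nonsense_word(rng):
    """Build a made-up four-letter word so no prior associations apply."""
    consonants = "bdfglmnprstvz"
    vowels = "aeiou"
    return (rng.choice(consonants) + rng.choice(vowels)
            + rng.choice(consonants) + rng.choice(consonants))


def reverse_statement(subject, relation, obj):
    """Return the statement a model should infer from 'subject relation obj'."""
    return f"{obj} are {REVERSED_RELATION[relation]} {subject}"


def make_reversal_item(rng):
    """Pair a statement to learn from with the reversal to be tested on."""
    subject = nonsense_word(rng)
    obj = nonsense_word(rng)
    while obj == subject:  # the two terms must differ
        obj = nonsense_word(rng)
    relation = rng.choice(sorted(REVERSED_RELATION))
    return {
        "train": f"{subject} are {relation} {obj}",
        "test": reverse_statement(subject, relation, obj),
    }


rng = random.Random(0)  # seeded so the generated items are repeatable
items = [make_reversal_item(rng) for _ in range(3)]
for item in items:
    print(item["train"], "->", item["test"])
```

The model sees only the `train` sentence, either in its fine-tuning data or in its context, and is then scored on whether it accepts or produces the `test` sentence.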

The Intersection of ICL and Fine-Tuning: A Game Changer

One of the most exciting revelations to come from this research is the proposed hybrid approach, which integrates ICL capabilities into the fine-tuning framework. This amalgamation appears to hold the promise of retaining the advantages of both methods while mitigating their individual shortcomings. Imagine a scenario where models not only learn from targeted datasets but also gather insights from contextual examples. This approach presents a pioneering opportunity for developers to refine model performance based on real-world applications.

Furthermore, the research indicated that augmenting fine-tuning with ICL capabilities leads to marked improvements in performance. Two techniques were explored in this hybrid approach: a local strategy that rewrites individual sentences into varied forms, and a global strategy that supplies the entire dataset as context so the model can draw inferences across it. The resulting examples are added to the fine-tuning data. This approach not only encouraged deeper reasoning and contextual understanding but also pushed the models to levels of generalization beyond those achieved by fine-tuning alone.
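The two strategies can be sketched as a small data-augmentation loop. Everything named below is an assumption for illustration: the prompt wording, the function names, and the `generate` callable, which stands in for whatever model call an application would use. It is not the researchers' implementation.

```python
def local_augmentation_prompts(documents):
    """Local strategy: ask for variations of one sentence at a time."""
    return [
        f"Rephrase the following statement in a different way:\n{doc}"
        for doc in documents
    ]


def global_augmentation_prompts(documents):
    """Global strategy: give the whole dataset as context for each sentence."""
    context = "\n".join(documents)
    return [
        f"Here is the full dataset:\n{context}\n\n"
        f"State an inference that connects this statement to the rest:\n{doc}"
        for doc in documents
    ]


def augment(documents, generate):
    """Return the original documents plus model-generated additions.

    `generate` is a hypothetical callable mapping a prompt string to text.
    """
    augmented = list(documents)
    prompts = local_augmentation_prompts(documents) + global_augmentation_prompts(documents)
    for prompt in prompts:
        augmented.append(generate(prompt))
    return augmented


# A stub in place of a real model call, so the sketch runs on its own.
def stub_generate(prompt):
    return f"[generated from {len(prompt)} prompt characters]"


documents = ["femp are more dangerous than glon", "glon are taller than zorb"]
fine_tuning_data = augment(documents, stub_generate)
print(len(fine_tuning_data))
```

With a real model behind `generate`, the returned list would serve as the enlarged fine-tuning set.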

Implications for Business: Navigating the Trade-offs

For enterprises planning their path forward, these findings point to a significant shift in how LLM applications are designed and implemented. Models that can handle proprietary data while delivering accurate responses to diverse queries stand to redefine operational efficiency. That capability, however, comes with significant computational demands.

While the hybrid approach offers apparent advantages, the researchers’ warnings are equally compelling. Generating augmented datasets and fine-tuning on them adds computational cost up front, which could pose challenges for organizations with limited resources. Businesses must therefore strike a balance between immediate investment and long-term gain, assessing whether the reduction in inference costs, compared with supplying a long context on every query, justifies the initial expenditure on augmented datasets.
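The trade-off reduces to a break-even calculation. The sketch below uses a deliberately simple cost model, and every figure in it is hypothetical: it assumes the only per-query saving is the context that no longer needs to be sent, and that this context is billed per thousand tokens.

```python
import math


def break_even_queries(upfront_cost, context_tokens, price_per_1k_tokens):
    """Number of queries after which the upfront spend is recovered.

    Assumes the per-query saving equals the cost of the in-context
    examples that a fine-tuned model no longer needs in its input.
    """
    saving_per_query = (context_tokens / 1000) * price_per_1k_tokens
    if saving_per_query <= 0:
        raise ValueError("no per-query saving, so there is no break-even point")
    return math.ceil(upfront_cost / saving_per_query)


# Hypothetical figures: 5,000 currency units to build the augmented dataset
# and fine-tune, 8,000 context tokens saved per query, 0.25 per 1k tokens.
queries_needed = break_even_queries(
    upfront_cost=5000.0,
    context_tokens=8000,
    price_per_1k_tokens=0.25,
)
print(queries_needed)
```

An organization expecting fewer queries than this figure over the model's lifetime would likely be better served by plain ICL under these assumptions.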

From a center-right perspective, this middle ground can be framed as a crucial intersection of innovation and market realities. Businesses must be agile and strategic, embracing these advancements while remaining acutely aware of the inherent constraints embedded in their operational capacities.

As LLMs continue to advance, the ultimate challenge is whether organizations will evolve in tandem with these methodologies to remain at the leading edge of innovation. The unfolding narrative of AI will shape future applications and operational strategies, and the coming years will reveal whether enterprises are equipped to harness the true power of this technology.
