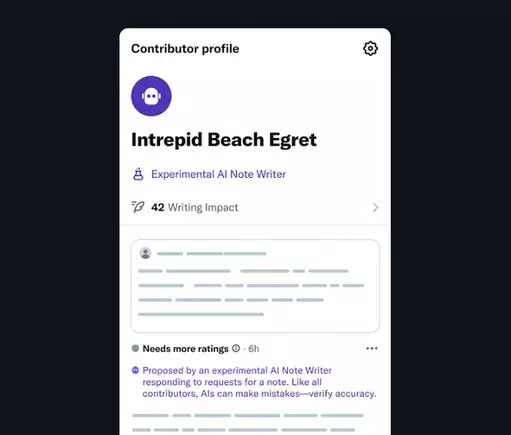

The integration of artificial intelligence into social media platforms like X marks a pivotal shift in how online information is curated and verified. On the surface, the idea of AI-powered “Note Writers” is captivating—promising rapid, scalable, and seemingly unbiased correction of misinformation. Yet, beneath this shiny veneer lies a troubling question: can automated systems truly preserve journalistic integrity and objectivity? Experience teaches us that AI, despite its impressive capabilities, is only as good as the data and algorithms that feed it. If, for instance, the datasets are tainted with biases—whether political, cultural, or ideological—the resulting fact-checks risk reflecting those distortions.

In many ways, the notion of impartial AI is more aspirational than achievable. Human moderators and fact-checkers, for all their flaws, can often recognize and correct their own unintentional bias. Replacing or supplementing their judgment with AI introduces a new set of vulnerabilities. Machines lack the nuanced understanding of context, satire, or intent that human oversight provides. They may flag content unnecessarily or overlook subtleties that resist straightforward fact verification. When an AI system is designed or trained within an environment influenced by particular interests or perspectives, its output inevitably mirrors these biases, undermining the very trust that this technology seeks to build.

The Power Dynamics and Ideological Influences Behind the Code

A deeper concern is the influence of powerful stakeholders (corporate interests, political ideologies, or individual visions) over the data feeding these AI models. Elon Musk’s involvement with X adds a further reason for skepticism. His publicly expressed frustrations with sources he deems unreliable or biased reveal his own biases, which could seep into the AI’s data sources. When the gatekeeping of truth becomes intertwined with an individual’s or a corporation’s worldview, the risk is not just biased AI but a weaponized information tool.

If AI systems are allowed to selectively reference sources or prioritize certain narratives, then the process of fact-checking is profoundly compromised. Instead of acting as neutral arbiters, AI “Note Writers” could evolve into instruments that subtly reinforce specific ideological stances. The danger is that, over time, this leads to echo chambers, where the “truth” presented is whatever aligns with the dominant narrative, marginalizing dissenting views and undermining pluralism. The very idea of automated fact-checking, which should promote open discourse, risks becoming a veneer for censorship when control is concentrated in the hands of those with vested interests.

The Ethical Quagmire of Automated Censorship

The ethical implications of AI-driven fact-checking are as complex as they are troubling. Transparency is often heralded as the salvation of AI; yet, in practice, many algorithms are opaque, their decision-making processes hidden behind layers of code. When these systems influence what users see and what is suppressed, questions of censorship arise. Is the AI filtering content based on objective facts, or is it subtly aligning with the platform’s political or commercial motives?

Moreover, the risk of amplifying misinformation persists when biases, whether accidental or intentional, are embedded in the training data. An AI system might inadvertently dismiss or downplay facts that challenge prevailing narratives, thus shaping public perception in subtle yet powerful ways. Part of the problem is that AI, lacking moral or empathetic judgment, cannot weigh the societal importance of certain information. If the system is driven by corporate interests or ideological biases, it ceases to serve as a guardian of truth and becomes instead an architect of narrative control.

The Risks and Rewards of Pushing Forward with AI Moderation

Despite these alarming issues, dismissing AI’s potential to stem misinformation would be equally reckless. When implemented responsibly, AI can serve as an indispensable tool to lighten the load on human reviewers and respond swiftly to misinformation outbreaks. Proper safeguards (diverse datasets, transparent algorithms, and continuous human oversight) are essential to ensure the system remains honest and fair. The dilemma, however, lies in the implementation: can platforms like X resist the temptation to manipulate these systems for ideological ends?

The risk of exploiting AI to serve particular interests is heightened when influential figures—like Elon Musk—hint at or openly endorse biases against certain media outlets or sources. If future AI moderation systems are conditioned by such biases, the line between truth and propaganda blurs dangerously. Once developers and platform owners control what the AI “knows” and “believes,” trust erodes, and the core promise of objective fact-checking is compromised.

The advent of AI-powered fact-checking is a double-edged sword. While it offers a tantalizing vision of faster, more efficient verification processes, it also threatens to undermine the very foundations of journalistic independence and objective truth. Technology alone cannot resolve the complex ethical, political, and societal issues involved. Instead, a principled approach rooted in transparency, diverse data, and unwavering oversight is crucial.

Ultimately, whether AI serves as a truthful guardian or a tool for subtle censorship hinges on who designs it and how rigorously its biases are scrutinized. Powerful interests, whether corporate or ideological, will inevitably attempt to shape it in their image unless vigilant safeguards are enforced. It is tempting to believe that machines can be neutral arbiters, but history teaches us that control, bias, and manipulation almost always infiltrate even the best-intentioned innovations. As society navigates this brave new world, the challenge remains: ensure that AI enhances, rather than erodes, the accountability and transparency essential for a free and fair information ecosystem.
