Microsoft’s latest AI-driven features in Windows 11 appear to herald a new dawn of digital interaction. Tools like Copilot Vision promise to make our devices smarter, more intuitive, and seemingly more human-like in their responses. But beneath this veneer of innovation lies a critical question: are these enhancements truly progressive, or do they mask an attempt at overreach? It’s tempting to get caught up in the hype about how seamlessly AI can analyze content or streamline multitasking. Yet such advancements should prompt us to scrutinize whether they genuinely empower users or subtly shift control toward the tech giant. This new wave of AI utility, touted as augmenting productivity, risks becoming a digital Trojan horse, designed not solely to serve users but to entrench Microsoft’s dominance. The core issue is a delicate one: where do we draw the line between convenience and surveillance? As these technologies become more embedded in the fabric of personal computing, the risk of normalizing pervasive data collection grows. If our devices are constantly analyzing what we see and do, are we truly gaining efficiency, or are we sacrificing our privacy? The promise of smarter, more capable tools is attractive, but it comes with a cost that many users are unprepared to shoulder.

Copilot Vision and the Future of Interaction—A Double-Edged Sword

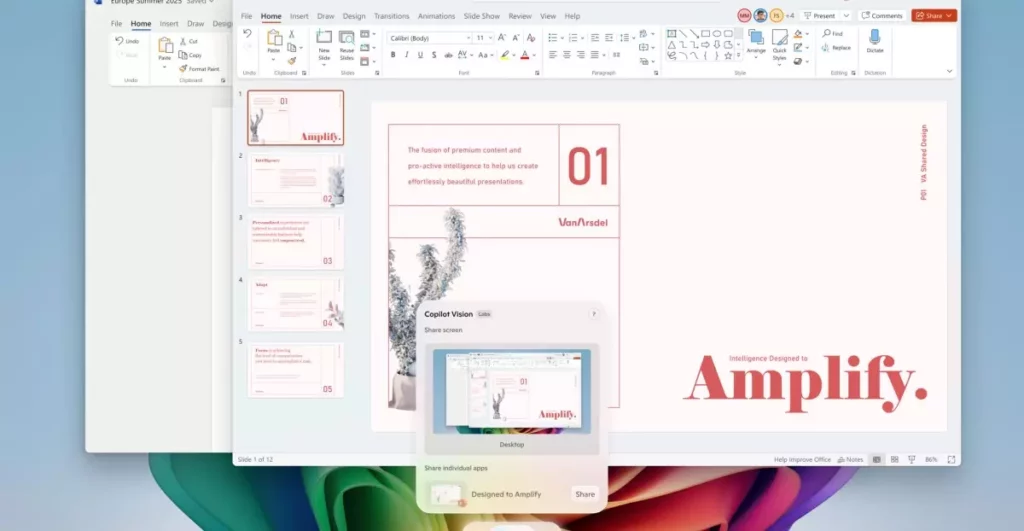

The showcase feature, Copilot Vision, signals a shift from command-based interaction to real-time contextual understanding. It is presented as an AI capable of analyzing multiple windows simultaneously and providing instant insights. In theory, this makes multitasking almost effortless. Yet this very sophistication cuts both ways. The technology relies heavily on constant data processing, which means your screen contents, including private conversations, sensitive documents, and personal images, are under continuous scrutiny. Such capabilities, if not carefully managed, threaten to erode user trust. Is it necessary for a computer to understand the content of every application at all times? Or does this overextend AI into a domain where privacy is traded for fleeting gains in productivity? Moreover, these features are being reserved for high-end hardware, raising concerns about digital inequity. Why should only users with cutting-edge devices benefit from the most capable AI features? This tiered approach risks creating a digital divide, where those who cannot afford the latest hardware are left behind in the AI arms race. Such stratification undermines the notion of universal access and may foster a form of technological elitism, contradicting the ideal of technology as an equalizer.

The Risks of Concentrating Power Through AI

Microsoft’s aggressive push into AI-embedded ecosystems could inadvertently strengthen big tech’s grip on our digital lives. The promise of an ecosystem where AI handles everything from scheduling to content creation is appealing, until one recognizes the potential for overcentralization. When a single corporation controls the core AI functionality, including features like “Click to Do,” the risk of monopolistic practices and concentrated control over data grows. How much authority should a private entity have over your digital environment? The line between helpful assistance and intrusive oversight is razor-thin. AI’s ability to interpret scenes, generate content, and automate tasks could be harnessed not just to serve users, but to subtly influence their behaviors and preferences. Such influence, if left unchecked, could tilt the balance away from user autonomy and toward corporate interests. Furthermore, requiring proprietary hardware or specific software versions to access advanced features exposes users to creeping obsolescence and dependency, a troubling trend that threatens digital self-determination.

The Privacy Dilemma: Convenience at What Cost?

No matter how “wizard-like” the AI becomes, it cannot escape the fundamental concern: privacy. As Microsoft’s AI features process sensitive content in real time, the potential for misuse or breach escalates. Users are often lulled into complacency by the promise of smarter tools, but few understand the full scope of the data collection involved. Every command, image, or webpage analyzed adds to a growing repository of personal information that could be exposed if security lapses occur. Even with reassuring disclaimers about data protection, such extensive analysis creates vulnerabilities, especially if the AI’s operations are opaque. The more integrated these features become in our daily workflows, the more we unwittingly authorize sweeping levels of data harvesting. For liberal-minded readers on the center-right, the core concern isn’t just privacy but also sovereignty over personal choices. When AI systems start interpreting our intentions and preferences, it’s not a stretch to imagine a future where consumer autonomy is subtly eroded by algorithmic influence. Microsoft’s gradual rollout strategy, which allows initial access with limitations, may seem cautious, but it does little to assuage fears that these features will eventually become inescapable and invasive.

Embracing the Future with Caution and Responsibility

Microsoft’s quest to embed AI into Windows 11 is emblematic of a broader big-tech trend, where the promise of innovation often overshadows the risk of overreach. For center-right liberal thinkers, it’s crucial to embrace technological progress while holding corporations accountable to ethical standards. The danger lies in an unchecked expansion of AI capabilities that prioritizes corporate growth over user welfare and civil liberties. Embracing AI’s potential must go hand in hand with robust safeguards: transparency about data use, user control over information, and equitable access to advanced tools. Otherwise, the cost of unchecked innovation could be a loss of individual sovereignty and an erosion of trust in the very devices we rely on. Microsoft’s approach, while ambitious, should serve as a clarion call for balanced development, where technological breakthroughs do not come at the expense of fundamental rights. As we enter this new era, it’s imperative that users remain critically engaged and vigilant. Only by demanding responsible AI deployment can we hope to shape a future where technology remains a tool of empowerment, not oppression.
