In recent years, artificial intelligence (AI) has made significant strides in automating everyday tasks, making life increasingly convenient for users. Among these advancements is the emergence of AI agents that can carry out specific actions, such as making restaurant reservations. While such technologies show promise, they also expose the limits within which they operate. For instance, a user might start a reservation at a chosen restaurant, only to discover that a credit card is required to finalize the booking. At that point, a human has to step in. This reliance on human intervention highlights how far AI still is from fully automating multi-step tasks.

One appealing feature of these AI agents is their ability to interpret queries flexibly. When a user asks for a "highly rated" restaurant, the agent weighs reviews and ratings, but only within a narrow scope. It shows a semblance of intelligence by searching for high-scoring establishments, yet its capabilities remain shallow. It does not draw on external data sources, nor does it cross-reference reviews from platforms such as OpenTable with other relevant information available online. All of this processing happens locally, without cloud-based data, which further limits the richness of the agent's output.

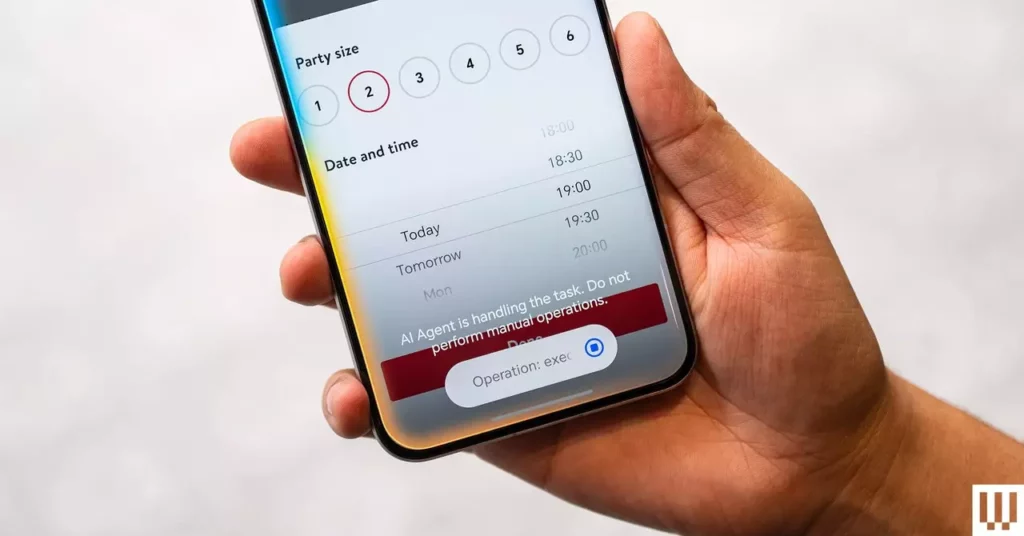

The term "agentic AI" has become a buzzword in the tech community, signaling a shift toward more autonomous systems. Companies like Google have been at the forefront, introducing models such as Gemini 2 that are designed to perform actions on behalf of users. Such models open up the possibility of generative user interfaces, which would change how we interact with technology. Traditionally, users work with applications by directly manipulating their interfaces. There is now a growing movement toward AI systems that generate those interfaces dynamically in response to user commands, allowing a more fluid style of interaction.

The problems users run into with AI systems, such as the restaurant reservation hiccup, mirror broader concerns about their reliability and effectiveness. Honor's approach, reminiscent of the Teach Mode on the Rabbit R1, points to an interesting solution: manually training an AI assistant to perform specific tasks. This approach removes the need for API access, the conventional way applications communicate with one another. Instead, the AI memorizes the steps a user demonstrates, which in theory lets it handle those tasks more autonomously over time. However, it also places considerable burden on the user to train the assistant and craft effective commands, which undercuts its ease of use.

As AI-driven automation spreads, it is important to stay aware of its limitations and the challenges that come with it. The conveniences offered by AI agents, such as those that manage restaurant reservations, are real, but these agents are still far from fully independent. The next steps will likely involve refining these systems and improving their ability to learn, so that they can handle increasingly complex tasks without stalling. As these systems evolve, designers will also need to plan carefully for how human users and intelligent agents share the work, paving the way for a more seamless experience.
