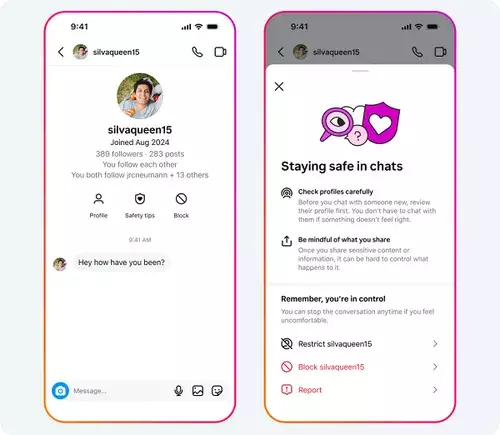

In an era when social media platforms dominate how young people socialize, Meta’s recent attempts to shield teenagers from online dangers look promising on the surface. The company’s initiatives, intended to create a safer environment, appear at first glance to be tangible steps forward. Features like safety prompts, streamlined blocking tools, nudity filters, and location notices suggest that Meta is taking its responsibility seriously. Yet beneath this veneer of progress lies a troubling disconnect: technological safeguards alone cannot counter the sophisticated tactics of predators, scammers, and other malicious actors. In reality, such measures amount to cosmetic patches on a systemic problem. Real danger often lurks behind the very features designed to protect, exploiting the platform’s vulnerabilities and users’ complacency.

Meta’s approach is reactive rather than proactive: it responds to crises after they emerge rather than preventing them. It introduces visible prompts and easy-to-use tools, but these are akin to adding locks to a door already riddled with cracks. Because online exploitation is systemic, platform safety cannot hinge solely on interface updates. Society’s digital addiction, combined with a growing undercurrent of predatory misconduct, demands a rigorous overhaul of design philosophy. If Meta truly aimed to prioritize teen safety, it would take a more comprehensive stance: structural reforms, aggressive algorithmic moderation, and a culture that discourages abuse. Instead, what it mostly offers are band-aid solutions that appease regulators and the public but do little to dismantle the core problems.

The Persistent Failures Beyond Surface-Level Interventions

The stark reality is reflected in Meta’s own disclosures: nearly 135,000 Instagram accounts have been removed for soliciting sexual content from, or leaving sexualized comments on, accounts featuring minors. This figure might seem impressive, but it actually reveals a distressing failure: an inability to eliminate the problem at its root. The sheer scale of these removals suggests that many more predatory accounts are likely still operating undetected, underscoring that the platform’s current safety measures remain insufficient. Removing harmful accounts is essential, but it is a reactive process that plays catch-up with an ever-evolving landscape of online threats. Predators adapt, becoming more discreet and better at covering their tracks. Meta’s dependence on account deletion and reporting mechanisms amounts to a tacit admission that prevention is far more complex than simply removing offending profiles.

Moreover, transparency about these figures, while responsible, exposes a harsh truth: the fight against online exploitation is an unending battle, and platforms like Meta are not decisively winning it. Without substantial reforms, including more sophisticated detection algorithms, proactive monitoring, and a cultural shift within online communities, such efforts will remain inadequate. The core issue extends beyond technical fixes: it involves societal responsibility, education, and a shared commitment to changing digital culture from the inside out.

Technological Limitations and Ethical Complexities

Meta’s safety features, such as nudity filters, location notices, and age restrictions, are steps in the right direction, but they also highlight the limits of relying on technology alone. An overwhelming majority of users have kept nudity protection switched on, which suggests broad acceptance of the feature. Still, technology cannot replace the nuanced human judgment needed to combat grooming, peer pressure, and exploitation. Human behavior is complex, and digital safeguards often fall short because they overlook the social and psychological dimensions of online risk.

Overregulation risks alienating users and inhibiting the authentic social connections vital to adolescent development. Underregulation, on the other hand, exposes teens to immediate danger. Striking this balance is arguably the most difficult challenge facing social media companies today. Meta’s awareness of this dilemma is evident in its cautious support for raising the minimum age for platform access. By advocating stricter age restrictions, potentially limiting access for those under 16, the company seems to acknowledge the need for upstream prevention. Enforcing such limits remains problematic, however: verifying age online is notoriously difficult, and loopholes inevitably emerge.

This raises uncomfortable questions about corporate responsibility. Is Meta genuinely committed to protecting its youngest users, or are these measures merely strategic moves to appease regulators and deflect criticism? Whatever the answer, technological solutions alone will never eradicate the risks. A cultural shift, fostered through digital literacy, parental oversight, and public education, is indispensable. Without it, all the safety features in the world amount to superficial measures that can be bypassed or exploited.

The Broader Political and Social Dimensions

Support for stricter age restrictions and more vigilant moderation policies reflects a recognition that social media cannot be entirely self-regulating. However, policies are only as good as their enforcement. The challenge lies in implementing meaningful reforms within a landscape driven by profit motives and entertainment metrics. Too often, safety takes a backseat to engagement, profitability, and user retention.

From a centrist-liberal perspective, this tension reveals an uncomfortable truth: the system rewards neglectful practices that put short-term profits above long-term safety. Yet the response from Meta and similar platforms should not be solely about regulatory compliance; it should involve genuine corporate accountability. The current approach, which emphasizes transparency reports and reactive content removal, is insufficient. It is akin to bailing water out of a sinking ship instead of fixing the leaks.

From a pragmatic, center-right perspective, empowering individuals (parents, educators, and policymakers) must also be part of the solution. Technology can serve as an aid, but it cannot replace collective responsibility and legal reforms that hold predators and exploiters accountable. The platform must evolve beyond superficial protections and actively foster a safer digital environment through responsibility, innovation, and societal engagement.

Without such a shift, the illusion of safety will persist, leaving the most vulnerable—teenagers—exposed to digital predators lurking behind the veneer of a “safe” platform. And ultimately, no amount of filters, prompts, or account removals can substitute for a societal commitment to protecting the integrity and innocence of the next generation.
