In an era where artificial intelligence remains a battleground of corporate power and proprietary dominance, Alibaba’s recent open-source endeavors present a paradox that deserves scrutiny. The company touts its models as democratizing and advancing AI capabilities outside the shadows of tech giants like OpenAI and Google. But beneath this laudable veneer lies a more nuanced reality—one where the push for specialization and openness threatens to destabilize the very infrastructure that underpins responsible AI development.

The industry has long trusted the robustness of large, monolithic systems that, while opaque in operation, offer a semblance of reliability through centralized control. Alibaba’s move to fragment AI functionality into highly specialized models (separating reasoning, coding, translation, and other tasks) risks creating a patchwork ecosystem prone to inconsistency. While such specialization fosters excellence in narrow domains, it also fragments the pipeline, increasing the risk of incompatible outputs, maintenance overhead, and a lack of holistic oversight. This approach demands greater technical literacy from users, who must now navigate multiple models, each optimized for a distinct task but potentially lacking seamless interoperability.

Furthermore, this division underscores a philosophical divergence from the industry’s historical preference for integrated systems. The gamble is whether this modular trend will foster innovation or sow discord among developers, enterprises, and regulators. From a skeptical lens, it seems Alibaba’s emphasis on technical dissection prioritizes short-term competitive advantage over systemic stability. The risk is that, in chasing performance spikes in isolated tasks, the industry may foster ecosystems that are brittle, more susceptible to fragmentation, and ultimately less reliable.

Open Source as a Double-Edged Sword

Alibaba’s licensing strategy is one of the boldest aspects of its approach. By adopting the Apache 2.0 license, the company opens up access, removing dependence on hosted APIs, vendor lock-in, and proprietary constraints. In theory, this is a triumph for innovation and democratization, empowering startups, SMEs, and larger firms to adapt and deploy cutting-edge models freely. However, the open-source model, especially at this scale, also introduces significant risks.

Without stringent oversight, the very transparency intended to build trust can be exploited. Malicious actors could manipulate or repurpose these models for unethical ends, magnifying concerns about cyber threats, misinformation, or even geopolitical exploitation. Moreover, the lack of a centralized authority in open-source communities can lead to fragmentation of standards, making it difficult for enterprises to maintain consistent quality control or compliance with evolving regulatory frameworks.

The core issue is who bears responsibility for safe deployment. Historically, vendors of proprietary systems have carried this burden, employing internal guardrails, ethics checks, and controlled rollouts. Open models shift that responsibility onto the community, which, while fostering innovation, risks neglecting ethical governance. Alibaba’s approach therefore treads a fine line: fostering community-driven growth at the potential expense of oversight and societal safeguards.

Performance and Practicality: The Double-Edged Promise

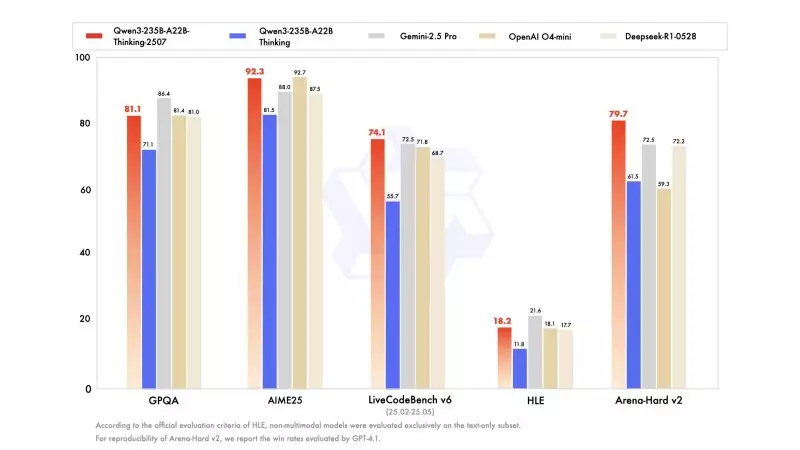

Alibaba’s models, especially the reasoning-focused Qwen3-235B-A22B, post outstanding benchmark scores that threaten to unseat established industry leaders. Yet behind these impressive figures lies another question: do these models truly solve real-world problems better than their proprietary counterparts, or are they merely optimized for benchmarks?

From a pragmatic perspective, the approach of separating reasoning models from instruction-following models aims to produce more reliable outputs. But in complex scenarios requiring multi-modal reasoning, adaptation, or contextual understanding, the compartmentalization might lead to flaky integrations or breakdowns. The industry’s historical caution holds: impressive benchmarks do not always translate into real-world robustness. Techniques that excel in controlled settings often stumble when confronted with the unpredictable variables characteristic of enterprise deployments.

Moreover, stability and predictability are paramount for enterprise adoption. Open models, especially those tuned for speed and flexibility, risk sacrificing consistency. Without centralized oversight or rigorous validation pipelines, organizations might find themselves investing heavily in customization with no performance guarantees.

The Ideology Behind the Innovation

Alibaba’s push towards open, specialized AI models reflects a broader ideological shift—one that values decentralization, transparency, and rapid innovation over monopolistic control. On the surface, this counters the arrogance of tech giants who treat their AI ecosystems as proprietary fortresses. Yet, this ideological stance warrants skepticism: wholesale democratization risks diluting quality control and obscuring accountability.

While the industry needs a counterweight to monopolistic tendencies, healthy competition must also reinforce standards of safety, reliability, and societal responsibility. Alibaba’s models, ambitious and accessible, could inadvertently cultivate an ecosystem where the lines between ethical use and malicious exploitation blur. The central question is whether this approach engenders a more resilient AI landscape or simply disperses risk among less regulated communities.

From a pragmatic, center-right liberal perspective—where fostering innovation responsibly is key—Alibaba’s strategy must be tempered with cautious governance. The pursuit of open, fast-moving AI models should not overshadow the essential need for oversight, accountability, and alignment with societal interests. Innovation is vital, but it cannot be an unchecked wild west that leaves society vulnerable to unforeseen consequences.

Alibaba’s latest AI initiatives boldly challenge the established order, an act both admirable and fraught with peril. While their emphasis on specialization, transparency, and open licensing is a breath of fresh air, it also offers a glimpse of a future riddled with fragility if left unchecked. The industry’s scramble for innovation must be balanced with an insistence on reliability, oversight, and long-term societal benefit. Without this equilibrium, the democratization of AI risks devolving into chaos rather than progress, undermining the foundational pillars of ethical, trustworthy, and responsible AI development.
