Large language models (LLMs) have changed how machines understand and generate human-like text. Despite their impressive capabilities, however, LLMs often struggle with accuracy, particularly in specialized domains. To address this, researchers have introduced an algorithm named Co-LLM, which lets a general-purpose LLM collaborate with a specialized counterpart to produce more accurate and efficient responses.

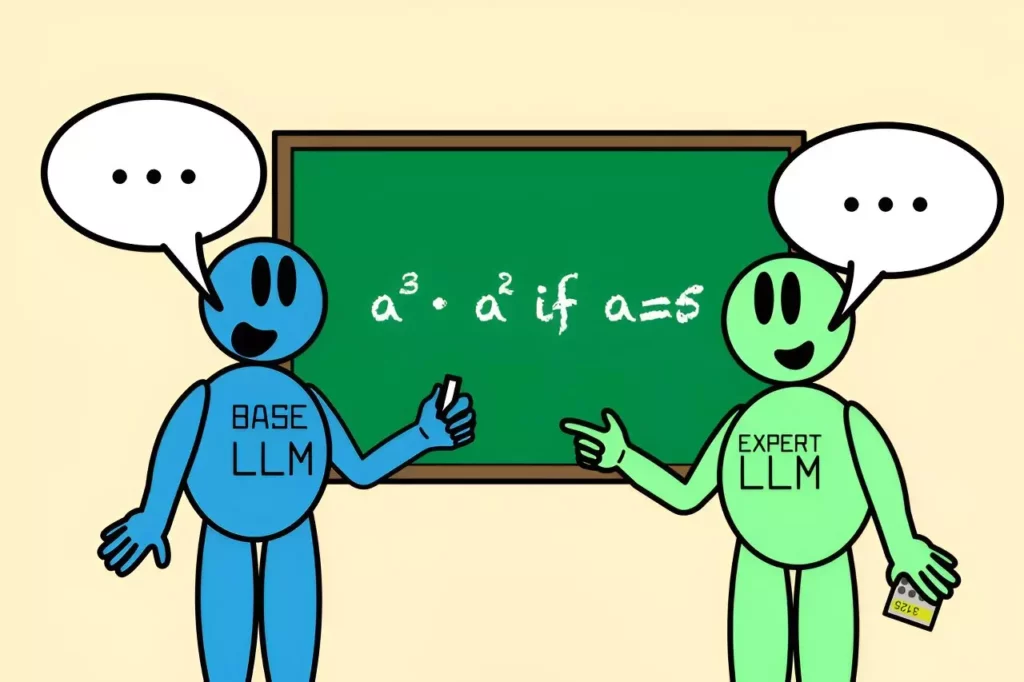

People often turn to experts when faced with questions beyond their own knowledge. The same principle applies to artificial intelligence. Large language models, while versatile, can falter on niche questions and produce erroneous outputs. What is needed is a method that lets these models recognize when to seek help from a specialized, more knowledgeable counterpart. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed Co-LLM as a solution: it lets models with different specialties work together for better accuracy.

Co-LLM diverges from traditional approaches. Rather than relying on large labelled datasets to dictate when models should collaborate, the algorithm lets the collaboration pattern emerge during training. The general-purpose LLM is trained to identify its own limits, so that it draws on the specialized model’s expertise only when necessary.
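To make the idea of learning without collaboration labels more concrete, here is a minimal sketch of one way it could be set up. It is an illustration under stated assumptions, not the researchers’ implementation: the decision to defer is treated as unobserved, and the training signal is the likelihood of the reference text summed over both possibilities at each step. The function name and all the probabilities below are hypothetical.

```python
import math

def marginal_log_likelihood(p_defer, p_base, p_expert):
    """Log-likelihood of a reference sequence when the defer decision is unobserved.

    p_defer[t]  : probability that the base model defers at step t (hypothetical switch output)
    p_base[t]   : probability the base model assigns to the reference token at step t
    p_expert[t] : probability the expert model assigns to the same token
    """
    total = 0.0
    for d, pb, pe in zip(p_defer, p_base, p_expert):
        # Sum over both possibilities (keep going vs. defer) instead of using a label.
        total += math.log((1.0 - d) * pb + d * pe)
    return total

# Toy numbers: the base model is weak on the third token, the expert is strong there.
p_base = [0.9, 0.8, 0.05]
p_expert = [0.6, 0.5, 0.9]

never_defer = marginal_log_likelihood([0.0, 0.0, 0.0], p_base, p_expert)
defer_on_hard_token = marginal_log_likelihood([0.0, 0.0, 1.0], p_base, p_expert)
```

In this toy setup, deferring on the token where the base model is weak yields a higher likelihood than never deferring, so maximizing this quantity would push the defer probabilities toward the hard tokens without anyone labelling them.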

At the heart of Co-LLM lies a mechanism known as the “switch variable.” It functions like an internal project manager that decides when the general-purpose model should call in the specialized model for assistance. As the base LLM produces a response, the switch evaluates each word (or token) as it is generated and identifies where input from the expert model could improve the answer’s accuracy. Because the expert is consulted only at those points, responses are not only more accurate but also generated more efficiently.

Consider a scenario in which a user prompts Co-LLM to identify various extinct bear species. The base LLM begins the response, while the switch variable determines where specialized input is necessary, such as details about when specific species went extinct. The result is a coherent response that draws on the strengths of both models, providing richer, more accurate information than either could furnish alone.
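The token-by-token hand-off described above can be sketched in a few lines of Python. This is a toy illustration, not the actual Co-LLM code: the two “models” are scripted stand-ins, and `collaborative_decode`, `defer_prob`, and the 0.5 threshold are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

# Each "model" is a hypothetical stand-in: given the tokens so far, return the next token.
NextToken = Callable[[List[str]], str]

def collaborative_decode(
    base_next: NextToken,
    expert_next: NextToken,
    defer_prob: Callable[[List[str]], float],
    threshold: float = 0.5,
    max_tokens: int = 20,
    eos: str = "<eos>",
) -> Tuple[List[str], List[str]]:
    """Generate token by token, letting the expert write a token when the switch fires."""
    tokens: List[str] = []
    sources: List[str] = []
    for _ in range(max_tokens):
        # The switch looks at the text so far and decides who writes the next token.
        use_expert = defer_prob(tokens) > threshold
        token = expert_next(tokens) if use_expert else base_next(tokens)
        if token == eos:
            break
        tokens.append(token)
        sources.append("expert" if use_expert else "base")
    return tokens, sources

# Scripted toy models: the base model does not know the date, the expert does.
BASE_SCRIPT = ["The", "cave", "bear", "went", "extinct", "roughly",
               "???", "years", "ago", "<eos>"]
EXPERT_SCRIPT = ["The", "cave", "bear", "went", "extinct", "roughly",
                 "<date-from-expert>", "years", "ago", "<eos>"]

def base_next(tokens: List[str]) -> str:
    return BASE_SCRIPT[len(tokens)]

def expert_next(tokens: List[str]) -> str:
    return EXPERT_SCRIPT[len(tokens)]

def defer_prob(tokens: List[str]) -> float:
    # Hypothetical switch: confident everywhere except the position of the date.
    return 0.9 if len(tokens) == 6 else 0.1

tokens, sources = collaborative_decode(base_next, expert_next, defer_prob)
```

Here the base model writes every token except the date, which comes from the expert. In a real system the defer probability would be produced by the trained base model rather than hard-coded by position.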

The implications of Co-LLM extend across many disciplines, particularly fields that demand specialized knowledge, such as medicine and mathematics. For instance, when faced with a medical query like identifying the ingredients in a prescription drug, the general-purpose LLM can draw on the expertise of a biomedical model, increasing the chances of a correct answer. By combining models in this way, researchers have demonstrated that the algorithm can produce more precise answers than models that work in isolation or that are combined through rigid training protocols.

One example involved mathematical problem-solving: when a general-purpose LLM working alone miscalculated a problem, Co-LLM corrected the answer by bringing in an expert math model. This ability to switch between models according to context improves overall performance and shows the value of situational awareness in AI-generated responses.

Co-LLM’s potential does not stop at its current capabilities. Researchers are actively exploring ways to improve the algorithm further. For example, a more robust self-correction mechanism could address situations where the expert model fails to provide accurate information. Such a mechanism could allow Co-LLM to adjust answers in real time, maintaining accuracy even when the expert’s contribution is wrong.

The researchers also plan to keep the expert model up to date as new information becomes available while training only the general-purpose model. With the latest information reflected in its responses, the algorithm could help keep enterprise documents both accurate and current.

The introduction of Co-LLM marks a significant development in the collaboration between language models, mimicking human judgment in calling upon experts when necessary. Its token-level routing offers a nuanced approach that improves both efficiency and performance, setting a precedent for future research in multi-model interactions. As the needs of users evolve, the Co-LLM algorithm stands poised to enhance not just the accuracy of LLM outputs but also their relevance, ultimately bridging the gap between generalized and specialized knowledge in artificial intelligence.
